Streamlining Analysis with Data Processing

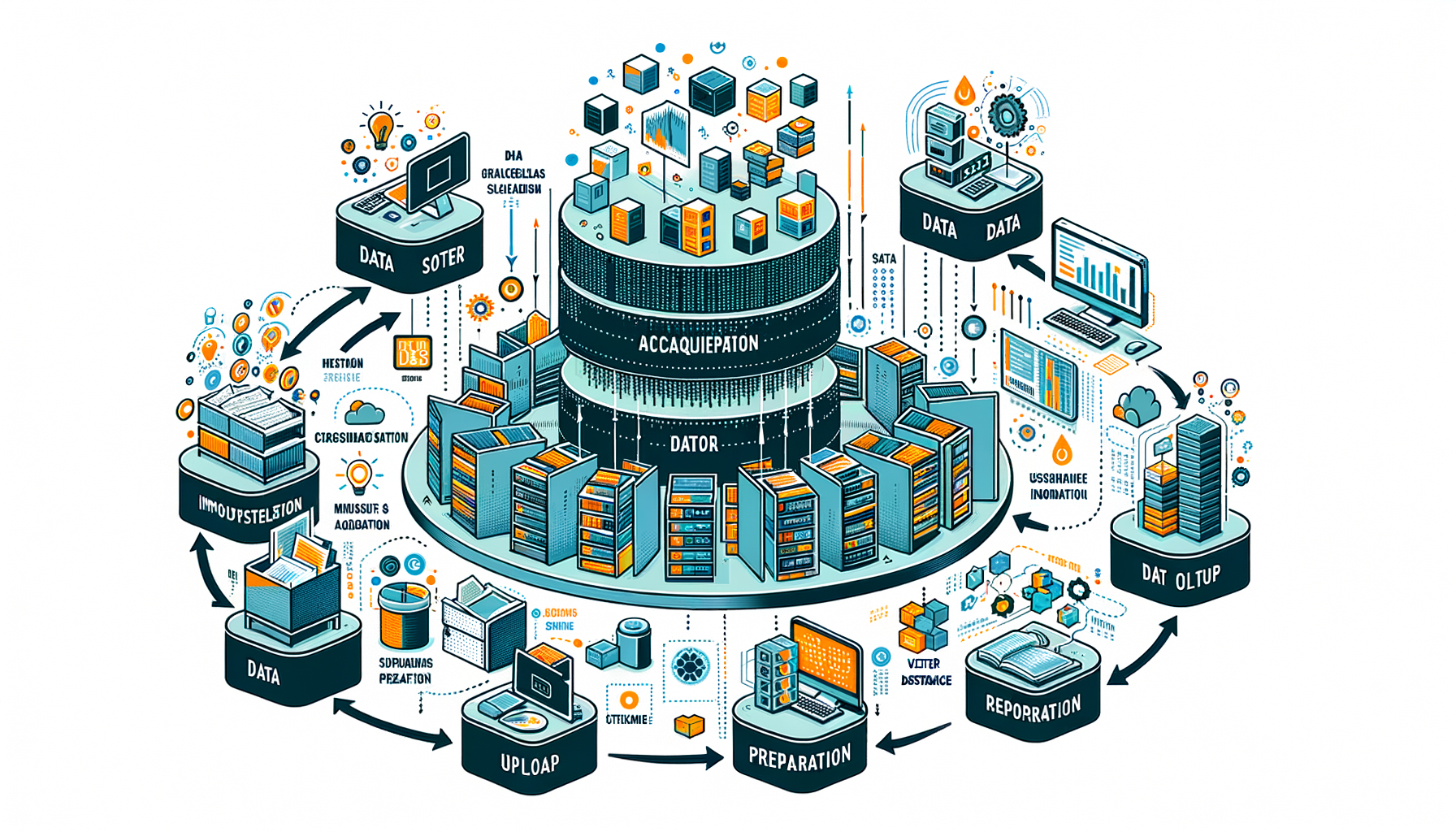

The data processing pipeline is a critical component of our project, designed to handle the raw content retrieved by the web scraper and prepare it for further analysis. Its primary purpose is to parse and chunk the content, breaking it down into manageable segments suitable for subsequent processing and extraction of insights. By organising the data in a structured format, the pipeline lays the foundation for effective analysis and interpretation, enabling us to derive actionable insights to inform disaster response and community engagement efforts.

The pipeline is needed for two reasons. First, the raw content retrieved by the web scraper often consists of lengthy web pages and documents, in both plain-text and PDF form, and analysing such content manually would be impractical. The pipeline automates parsing and segmentation, which lets us handle large volumes of data. Second, the structured format it produces gives later analysis techniques, such as natural language processing and sentiment analysis, a clear and consistent data structure to work with.
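To make the parsing and segmentation step more concrete, here is a minimal sketch of how text could be chunked into structured records. It is an illustration under stated assumptions rather than the project's actual implementation: the `Chunk` record, the `chunk_text` function, the `max_chars` limit and the sentence-boundary strategy are all hypothetical, and the sketch assumes that any PDF content has already been converted to plain text upstream.

```python
import re
from dataclasses import dataclass
from typing import List


@dataclass
class Chunk:
    """Hypothetical structured record for one segment of a scraped document."""
    source: str  # identifier of the originating page or file (assumed field)
    index: int   # position of the chunk within that document
    text: str    # the chunk's content


def chunk_text(text: str, source: str, max_chars: int = 500) -> List[Chunk]:
    """Group sentences into chunks, aiming for at most max_chars each.

    A single sentence longer than max_chars is kept whole rather than cut.
    """
    # Collapse runs of whitespace so line breaks from scraping do not matter.
    normalised = re.sub(r"\s+", " ", text).strip()
    if not normalised:
        return []

    # Naive sentence split: break after '.', '!' or '?' followed by a space.
    sentences = re.split(r"(?<=[.!?])\s+", normalised)

    chunks: List[Chunk] = []
    current = ""
    for sentence in sentences:
        candidate = f"{current} {sentence}".strip()
        if len(candidate) <= max_chars or not current:
            # The sentence fits, or it starts a new chunk on its own.
            current = candidate
        else:
            # Adding the sentence would exceed the limit: close the chunk.
            chunks.append(Chunk(source, len(chunks), current))
            current = sentence
    if current:
        chunks.append(Chunk(source, len(chunks), current))
    return chunks


if __name__ == "__main__":
    sample = "Flood warnings were issued. Residents were evacuated. Roads reopened."
    for chunk in chunk_text(sample, source="example-page", max_chars=60):
        print(chunk.index, chunk.text)
```

Splitting at sentence boundaries rather than at a fixed character offset keeps each segment readable on its own, which tends to matter for later steps such as sentiment analysis. A real pipeline might prefer paragraph or token-based limits instead.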

In conclusion, the data processing pipeline is the intermediary step between scraping and analysis: it transforms raw content from both text and PDF files into a structured format. Automating parsing and segmentation makes our data processing workflow more efficient and scalable, and the resulting structured data lays the groundwork for extracting actionable insights to support disaster response and community engagement efforts.